Best DeepSeek Android/iPhone Apps

Compared with Meta's Llama 3.1 (405 billion parameters, all used at once), DeepSeek V3 is over 10 times more efficient yet performs better. The original model is 4-6 times more expensive, and it is also 4 times slower. The model goes head-to-head with, and sometimes outperforms, models like GPT-4o and Claude-3.5-Sonnet in various benchmarks. "Compared to the NVIDIA DGX-A100 architecture, our approach using PCIe A100 achieves approximately 83% of the performance in TF32 and FP16 General Matrix Multiply (GEMM) benchmarks." "The associated dequantization overhead is largely mitigated under our increased-precision accumulation process, a critical aspect for achieving accurate FP8 General Matrix Multiplication (GEMM)." Over the years, I've used many developer tools, developer productivity tools, and general productivity tools like Notion. Most of these tools have helped me get better at what I wanted to do and brought sanity to several of my workflows. With strong intent matching and query understanding technology, a business can get very fine-grained insights into its customers' search behaviour and preferences, so that it can stock its inventory and manage its catalog effectively. 10. Once you are ready, click the Text Generation tab and enter a prompt to get started!
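The remark about dequantization overhead and higher-precision accumulation can be illustrated with a small sketch. This is not DeepSeek's kernel: plain integers stand in for FP8 values, and the per-group scale, the `QMAX` range, and the function names `quantize_groups` and `quantized_dot` are all assumptions made for illustration. The idea shown is only that low-precision products are accumulated exactly within a group, and the scales are applied once per group rather than once per element.

```python
from typing import List, Tuple

QMAX = 127  # assumed integer range standing in for a low-precision format


def quantize_groups(x: List[float], group_size: int) -> Tuple[List[int], List[float]]:
    """Quantize x group by group; return integer codes and one scale per group."""
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(x), group_size):
        group = x[start:start + group_size]
        max_abs = max(abs(v) for v in group)
        # An all-zero group gets scale 1.0 to avoid dividing by zero.
        scale = max_abs / QMAX if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(round(v / scale) for v in group)
    return codes, scales


def quantized_dot(a: List[float], b: List[float], group_size: int) -> float:
    """Dot product of a and b computed from group-quantized codes."""
    if len(a) != len(b):
        raise ValueError("a and b must have the same length")
    a_codes, a_scales = quantize_groups(a, group_size)
    b_codes, b_scales = quantize_groups(b, group_size)
    total = 0.0
    for g, (sa, sb) in enumerate(zip(a_scales, b_scales)):
        lo = g * group_size
        hi = lo + group_size
        # Integer accumulation inside the group is exact (the "higher precision" step).
        partial = sum(p * q for p, q in zip(a_codes[lo:hi], b_codes[lo:hi]))
        # Dequantize once per group by applying both scales to the partial sum.
        total += partial * sa * sb
    return total
```

For example, `quantized_dot([0.5, -1.0, 2.0, 4.0], [1.0, 1.0, 1.0, 1.0], group_size=2)` lands close to the exact value 5.5, with a small rounding error from quantization.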

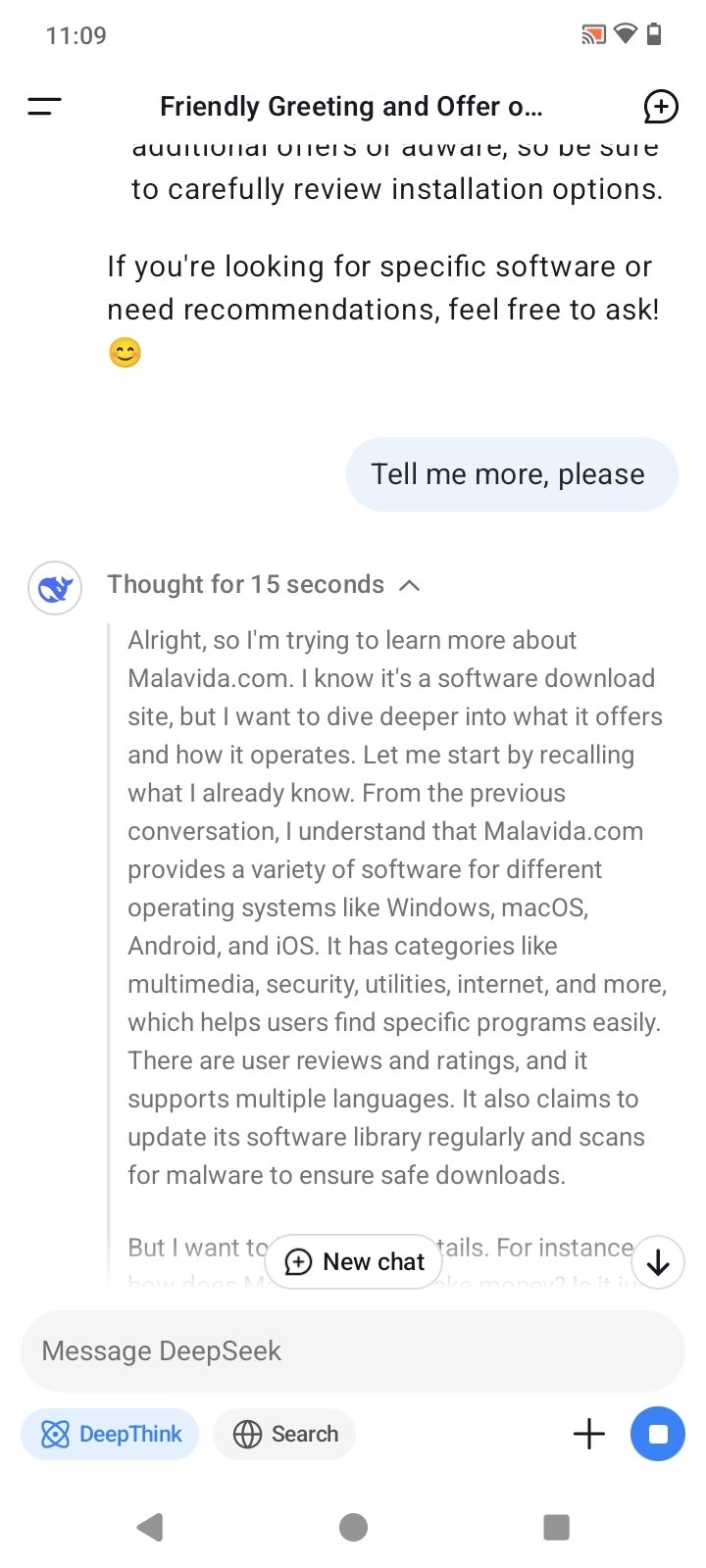

This is so you can see the reasoning process it went through to reach its answer. Note: while these models are powerful, they can sometimes hallucinate or provide incorrect information, so careful verification is necessary. While it's praised for its technical capabilities, some noted that the LLM has censorship issues. While the model has a massive 671 billion parameters, it only uses 37 billion at a time, making it highly efficient. 1. Click the Model tab. 9. If you want any custom settings, set them and then click Save settings for this model followed by Reload the Model in the top right. 8. Click Load, and the model will load and is now ready for use. LLM technology has hit a ceiling, with no clear answer as to whether the $600B investment will ever yield reasonable returns. In tests, the approach works on some relatively small LLMs but loses power as you scale up (with GPT-4 being harder for it to jailbreak than GPT-3.5). Once a token reaches the target nodes, we will endeavor to ensure that it is instantaneously forwarded via NVLink to the specific GPUs that host its target experts, without being blocked by subsequently arriving tokens.
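To put the 671 billion versus 37 billion figure in perspective, here is a rough sketch of the arithmetic, together with a toy version of the "pick a few experts per token" idea behind it. The function names and the example scores are hypothetical, and real routing in a mixture-of-experts model involves learned gates and load balancing that this does not attempt to show.

```python
from typing import List

TOTAL_PARAMS_B = 671   # total parameters, in billions (figure quoted in the text)
ACTIVE_PARAMS_B = 37   # parameters used per token, in billions (figure quoted in the text)


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters that is used at a time."""
    return active / total


def top_k_experts(scores: List[float], k: int) -> List[int]:
    """Return the indices of the k highest-scoring experts, best first."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"Active share: {share:.1%}")
    # Hypothetical gate scores for four experts; only two are selected.
    print(top_k_experts([0.1, 0.7, 0.05, 0.9], k=2))
```

In other words, only around one in eighteen parameters is touched at a time, which is where the efficiency claim comes from.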

4. The model will begin downloading. Once it is finished, it will say "Done". The latest in this pursuit is DeepSeek Chat, from China's DeepSeek AI. Open-sourcing the new LLM for public research, DeepSeek AI showed that DeepSeek Chat performs much better than Meta's Llama 2-70B in various fields. Depending on how much VRAM you have on your machine, you may be able to take advantage of Ollama's ability to run multiple models and handle multiple concurrent requests by using DeepSeek Coder 6.7B for autocomplete and Llama 3 8B for chat. The best hypothesis the authors have is that humans evolved to think about relatively simple things, like following a scent in the ocean (and then, eventually, on land), and this kind of work favored a cognitive system that could take in a huge amount of sensory data and compile it in a massively parallel way (e.g., how we convert all the information from our senses into representations we can then focus attention on) and then make a small number of decisions at a much slower rate.
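The Ollama setup mentioned above (one model for autocomplete, another for chat) might look roughly like this from a script. It is a minimal sketch that assumes Ollama is running locally on its default port and that the model tags `deepseek-coder:6.7b` and `llama3:8b` have already been pulled; the `MODELS` mapping and the helper names are made up for this example.

```python
import json
import urllib.request
from typing import Dict

# Assumption: Ollama's default local endpoint for one-shot generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

# Assumption: these tags match the models you have pulled locally.
MODELS: Dict[str, str] = {
    "autocomplete": "deepseek-coder:6.7b",
    "chat": "llama3:8b",
}


def build_payload(task: str, prompt: str) -> dict:
    """Build the JSON body for a non-streaming generate request."""
    return {"model": MODELS[task], "prompt": prompt, "stream": False}


def generate(task: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the prompt to the model assigned to this task and return its text."""
    data = json.dumps(build_payload(task, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the server running, `generate("autocomplete", "def fibonacci(n):")` would go to DeepSeek Coder and `generate("chat", "Explain recursion briefly.")` to Llama 3. Whether both models fit in memory at once depends on your VRAM.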
