6 Questions It's Good to Ask About DeepSeek

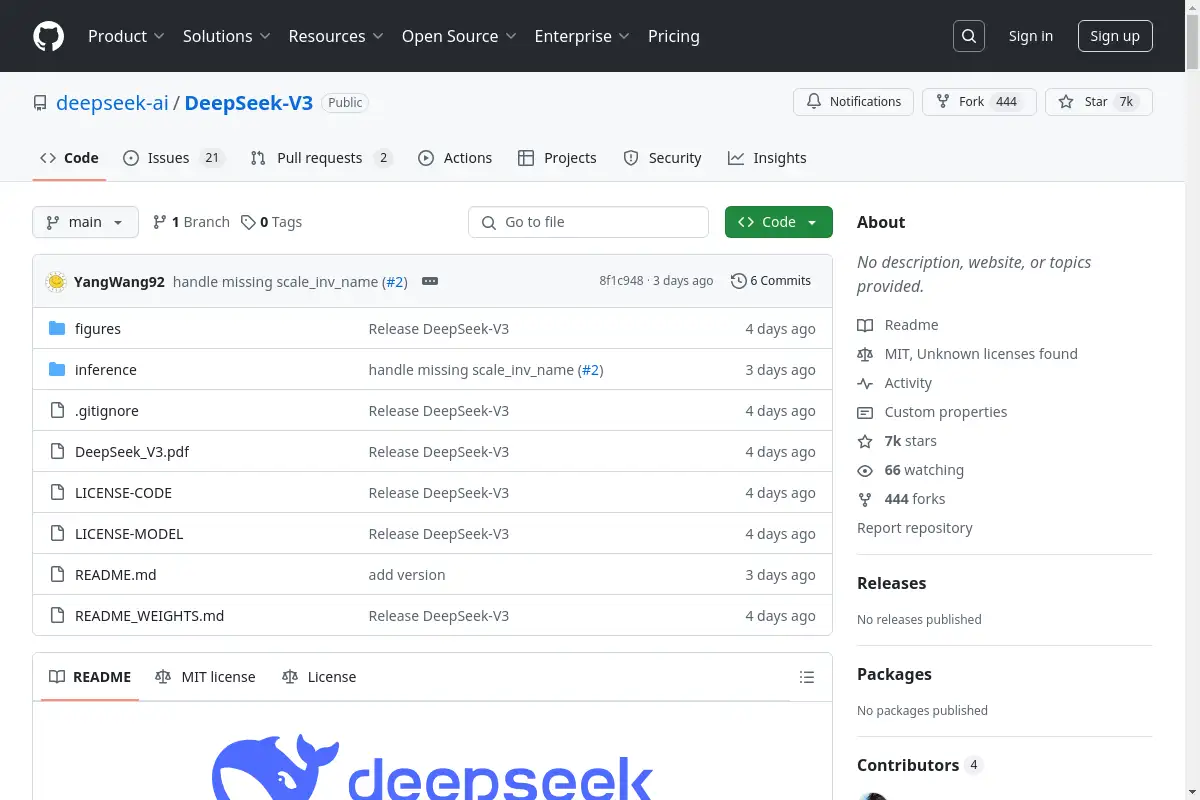

Shall we take a look at the DeepSeek model family? Like many other Chinese AI models, such as Baidu's Ernie or ByteDance's Doubao, DeepSeek is trained to avoid politically sensitive questions.

DeepSeek-V3's performance is comparable to leading closed-source models like GPT-4o and Claude-Sonnet-3.5, narrowing the gap between open-source and closed-source models in this domain. On coding-related tasks, DeepSeek-V3 emerges as the top-performing model on coding competition benchmarks such as LiveCodeBench, solidifying its position as the leading model in this domain. On factuality benchmarks, DeepSeek-V3 demonstrates superior performance among open-source models on both SimpleQA and Chinese SimpleQA. Notably, it even outperforms o1-preview on specific benchmarks such as MATH-500, demonstrating its strong mathematical reasoning capabilities.

• Knowledge: On educational benchmarks such as MMLU, MMLU-Pro, and GPQA, DeepSeek-V3 outperforms all other open-source models, achieving 88.5 on MMLU, 75.9 on MMLU-Pro, and 59.1 on GPQA.

In-depth evaluations have been conducted on the base and chat models, comparing them to existing benchmarks. Despite its economical training costs, comprehensive evaluations reveal that DeepSeek-V3-Base has emerged as the strongest open-source base model currently available, especially in code and math.

The rule-based reward model was manually programmed.

In the remainder of this paper, we first present a detailed exposition of our DeepSeek-V3 model architecture (Section 2). Subsequently, we introduce our infrastructures, encompassing our compute clusters, the training framework, the support for FP8 training, the inference deployment strategy, and our suggestions on future hardware design. DeepSeek-V3 also employs a Multi-Token Prediction (MTP) training objective, which we have observed to enhance the overall performance on evaluation benchmarks.

The pace of releases has been great for the overall ecosystem, but it makes it quite difficult for an individual developer to catch up! However, with LiteLLM, using the same implementation format, you can use any model provider (Claude, Gemini, Groq, Mistral, Azure AI, Bedrock, etc.) as a drop-in replacement for OpenAI models.

• At an economical cost of only 2.664M H800 GPU hours, we complete the pre-training of DeepSeek-V3 on 14.8T tokens, producing the currently strongest open-source base model. During pre-training, we train DeepSeek-V3 on 14.8T high-quality and diverse tokens.
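To make the idea of a manually programmed, rule-based reward more concrete, here is a minimal sketch. It assumes a hypothetical convention in which the model writes its final answer inside `\boxed{...}` and the reward is 1.0 for an exact match with a reference answer. The function name, the regex, and the scoring rule are illustrative assumptions, not DeepSeek's actual implementation.

```python
import re

# Hypothetical convention: the final answer appears as \boxed{...} in the response.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def rule_based_reward(response: str, reference: str) -> float:
    """Return 1.0 if the last boxed answer equals the reference, else 0.0."""
    matches = BOXED.findall(response)
    if not matches:
        return 0.0  # no answer in the required format, so no reward
    predicted = matches[-1].strip()
    return 1.0 if predicted == reference.strip() else 0.0


if __name__ == "__main__":
    print(rule_based_reward(r"So the total is \boxed{42}.", "42"))  # 1.0
    print(rule_based_reward("The total is 42.", "42"))              # 0.0
```

Because the check is a fixed rule rather than a learned model, it is cheap to evaluate and hard to game for tasks with a verifiable answer, though it only works where such a format can be enforced.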

China's DeepSeek team has built and released DeepSeek-R1, a model that uses reinforcement learning to train an AI system to make use of test-time compute.

Furthermore, we meticulously optimize the memory footprint, making it possible to train DeepSeek-V3 without using costly tensor parallelism. Through the support for FP8 computation and storage, we achieve both accelerated training and reduced GPU memory usage. We profile the peak memory usage of inference for 7B and 67B models at different batch size and sequence length settings.

In the first stage, the maximum context length is extended to 32K, and in the second stage, it is further extended to 128K. Following this, we conduct post-training, including Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) on the base model of DeepSeek-V3, to align it with human preferences and further unlock its potential. Combined with 119K GPU hours for the context length extension and 5K GPU hours for post-training, DeepSeek-V3 costs only 2.788M GPU hours for its full training.
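The total quoted above can be checked with a few lines of arithmetic. The sketch below uses only the GPU-hour figures given in the text (2.664M for pre-training, 119K for context extension, 5K for post-training). The dictionary keys are illustrative names, not terms from the report.

```python
# GPU-hour figures quoted in the text, in thousands of H800 GPU hours.
gpu_hours_k = {
    "pre_training": 2664,
    "context_extension": 119,
    "post_training": 5,
}

total_k = sum(gpu_hours_k.values())
print(f"Total: {total_k / 1000:.3f}M GPU hours")  # Total: 2.788M GPU hours

# Share of the total budget spent in each stage.
for stage, hours in gpu_hours_k.items():
    print(f"{stage}: {hours / total_k:.1%}")
```

The breakdown shows that pre-training accounts for nearly all of the reported compute, with context extension and post-training adding only a small fraction.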

Next, we conduct a two-stage context length extension for DeepSeek-V3.

I believe succeeding at NetHack is incredibly hard and requires a very good long-horizon context system as well as an ability to infer quite complex relationships in an undocumented world. "Success in NetHack demands both long-term strategic planning, since a winning game can involve hundreds of thousands of steps, as well as short-term tactics to fight hordes of monsters".

This paper presents a new benchmark called CodeUpdateArena to evaluate how well large language models (LLMs) can update their knowledge about evolving code APIs, a critical limitation of current approaches.

In recent years, Large Language Models (LLMs) have been undergoing rapid iteration and evolution (OpenAI, 2024a; Anthropic, 2024; Google, 2024), progressively diminishing the gap towards Artificial General Intelligence (AGI). That is why the world's most powerful models are either made by massive corporate behemoths like Facebook and Google, or by startups that have raised unusually large amounts of capital (OpenAI, Anthropic, XAI).
