We Asked ChatGPT: Should Schools Ban You?

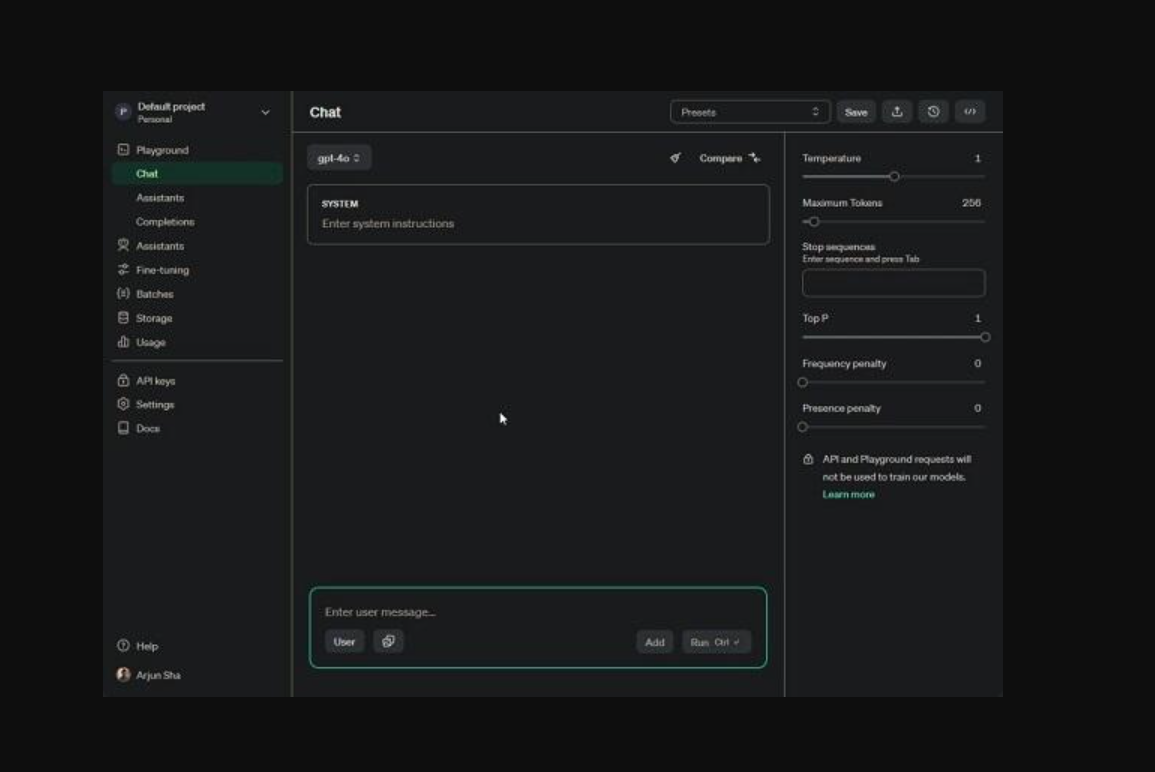

We're also starting to roll out to ChatGPT Free with usage limits today. Fine-tuning prompts and optimizing interactions with language models are crucial steps to achieve the desired behavior and improve the performance of AI models like ChatGPT. In this chapter, we explored the various techniques and strategies to optimize prompt-based models for enhanced performance. Techniques for Continual Learning − Techniques like Elastic Weight Consolidation (EWC) and Knowledge Distillation enable continual learning by preserving the knowledge acquired from previous prompts while incorporating new ones. Continual Learning for Prompt Engineering − Continual learning enables the model to adapt and learn from new data without forgetting previous knowledge. Pre-training and transfer learning are foundational concepts in Prompt Engineering, which involve leveraging existing language models' knowledge to fine-tune them for specific tasks. These techniques help prompt engineers find the optimal set of hyperparameters for the specific task or domain. Context Window Size − Experiment with different context window sizes in multi-turn conversations to find the optimal balance between context and model capacity.
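As a rough illustration of how Elastic Weight Consolidation preserves earlier knowledge, the sketch below computes the standard EWC quadratic penalty, (λ/2) · Σ Fᵢ (θᵢ − θᵢ*)², on plain Python lists. The function names, the flat-list parameter representation, and the `lam` default are assumptions made for this example; a real setup would apply the same formula to a framework's parameter tensors.

```python
def ewc_penalty(params, anchor_params, fisher, lam=1.0):
    """Quadratic EWC penalty: (lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2.

    params        -- current parameter values (flat list, illustrative only)
    anchor_params -- parameter values learned on the earlier task
    fisher        -- per-parameter Fisher information estimates (importance)
    lam           -- strength of the pull toward the earlier parameters
    """
    if not (len(params) == len(anchor_params) == len(fisher)):
        raise ValueError("params, anchor_params and fisher must have equal length")
    return 0.5 * lam * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher)
    )


def total_loss(task_loss, params, anchor_params, fisher, lam=1.0):
    """Loss on the new task plus the penalty for drifting from the old one."""
    return task_loss + ewc_penalty(params, anchor_params, fisher, lam)
```

Parameters with a large Fisher value are expensive to move, so the model stays close to what it learned earlier on exactly those weights while remaining free to adjust the rest.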

If you find this project innovative and valuable, I would be extremely grateful for your vote in the competition. Reward Models − Incorporate reward models to fine-tune prompts using reinforcement learning, encouraging the generation of desired responses. Chatbots and Virtual Assistants − Optimize prompts for chatbots and virtual assistants to provide helpful and context-aware responses. User Feedback − Collect user feedback to understand the strengths and weaknesses of the model's responses and refine prompt design. Techniques for Ensemble − Ensemble methods can involve averaging the outputs of multiple models, using weighted averaging, or combining responses using voting schemes. Top-p Sampling (Nucleus Sampling) − Use top-p sampling to restrict token generation to the smallest set of most probable tokens whose cumulative probability reaches p, resulting in more focused and coherent responses. Uncertainty Sampling − Uncertainty sampling is a common active learning strategy that selects prompts for fine-tuning based on their uncertainty. Dataset Augmentation − Expand the dataset with additional examples or variations of prompts to introduce diversity and robustness during fine-tuning. Policy Optimization − Optimize the model's behavior using policy-based reinforcement learning to achieve more accurate and contextually appropriate responses. Content Filtering − Apply content filtering to exclude specific types of responses or to ensure generated content adheres to predefined guidelines.
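The top-p (nucleus) sampling item above can be sketched in a few lines. This is a minimal illustration over a toy token-probability dictionary, not how any particular model API implements it; the function names `top_p_filter` and `sample_top_p` and the default `p=0.9` are assumptions chosen for the example.

```python
import random


def top_p_filter(probs, p=0.9):
    """Keep the smallest set of top tokens whose cumulative probability >= p.

    probs -- dict mapping token -> probability (assumed to sum to about 1)
    Returns a new dict with the kept tokens, renormalized to sum to 1.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:  # nucleus is complete
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}


def sample_top_p(probs, p=0.9, rng=None):
    """Draw one token from the renormalized nucleus."""
    rng = rng or random.Random()
    nucleus = top_p_filter(probs, p)
    tokens = list(nucleus)
    weights = list(nucleus.values())
    return rng.choices(tokens, weights=weights, k=1)[0]
```

Lower values of `p` shrink the nucleus and make output more predictable; values near 1 keep almost the whole distribution and allow more varied text.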

Content Moderation − Fine-tune prompts to ensure content generated by the model adheres to community guidelines and ethical standards. Importance of Hyperparameter Optimization − Hyperparameter optimization involves tuning the hyperparameters of the prompt-based model to achieve the best performance. Importance of Ensembles − Ensemble techniques combine the predictions of multiple models to produce a more robust and accurate final prediction. Importance of Regular Evaluation − Prompt engineers should regularly evaluate and monitor the performance of prompt-based models to identify areas for improvement and measure the impact of optimization techniques. Incremental Fine-Tuning − Gradually fine-tune our prompts by making small adjustments and analyzing model responses to iteratively improve performance. Maximum Length Control − Limit the maximum response length to avoid overly verbose or irrelevant responses. Transformer Architecture − Pre-training of language models is typically done using transformer-based architectures like GPT (Generative Pre-trained Transformer) or BERT (Bidirectional Encoder Representations from Transformers). We've been using this wonderful shortcut by Yue Yang all weekend; our Features Editor, Daryl, even used ChatGPT through Siri to help with completing Metroid Prime Remastered. These techniques help enrich the prompt dataset and lead to a more versatile language model. "Notably, the language modeling list contains more education-related occupations, indicating that occupations in the field of education are likely to be relatively more impacted by advances in language modeling than other occupations," the study reported.
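One simple way to carry out the hyperparameter optimization mentioned above is an exhaustive grid search. The sketch below is illustrative only: `grid_search`, the example search space, and `toy_evaluate` are hypothetical names, and `toy_evaluate` is a stand-in for whatever real quality metric an evaluation run on held-out prompts would return.

```python
from itertools import product


def grid_search(search_space, evaluate):
    """Try every combination in search_space and return the best one.

    search_space -- dict mapping hyperparameter name -> list of candidate values
    evaluate     -- callable taking a config dict and returning a score
                    (higher is better)
    """
    names = list(search_space)
    best_config, best_score = None, float("-inf")
    for values in product(*(search_space[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_evaluate(config):
    """Hypothetical scoring function; a real one would run the model
    on validation prompts and measure response quality."""
    return -abs(config["temperature"] - 0.7) - abs(config["top_p"] - 0.9)


if __name__ == "__main__":
    space = {"temperature": [0.2, 0.7, 1.0], "top_p": [0.5, 0.9]}
    print(grid_search(space, toy_evaluate))
```

Grid search grows multiplicatively with each added hyperparameter, so for larger spaces random search or Bayesian optimization is usually a better fit.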

This has enabled the tool to learn and process language across different styles and topics. ChatGPT proves to be an invaluable tool for a wide range of SQL-related tasks. Automate routine tasks such as emailing with this technology while maintaining a human-like engagement level. Balanced Complexity − Strive for a balanced complexity level in prompts, avoiding overcomplicated instructions or excessively simple tasks. Bias Detection and Analysis − Detecting and analyzing biases in prompt engineering is essential for creating fair and inclusive language models. Applying active learning techniques in prompt engineering can lead to a more efficient selection of prompts for fine-tuning, reducing the need for large-scale data collection. Data augmentation, active learning, ensemble techniques, and continual learning contribute to creating more robust and adaptable prompt-based language models. By fine-tuning prompts, adjusting context, sampling strategies, and controlling response length, we can optimize interactions with language models to generate more accurate and contextually relevant outputs.
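To make the active learning idea concrete, here is a minimal sketch of entropy-based uncertainty sampling, which picks the prompts a model is least sure about as candidates for fine-tuning. The function names and the input shape (a dict from prompt ID to a list of predicted class probabilities) are assumptions for illustration; how those probabilities are obtained depends on the model in use.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_uncertain(predictions, k=1):
    """Return the k prompt IDs whose predicted distributions have the
    highest entropy, i.e. where the model is least certain.

    predictions -- dict mapping prompt ID -> list of probabilities
    """
    ranked = sorted(
        predictions,
        key=lambda prompt_id: entropy(predictions[prompt_id]),
        reverse=True,
    )
    return ranked[:k]
```

Labeling or refining only the selected prompts concentrates effort where the model's predictions are closest to a coin flip, which is what reduces the need for large-scale data collection.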
