Warning: What Can You Do About DeepSeek Right Now

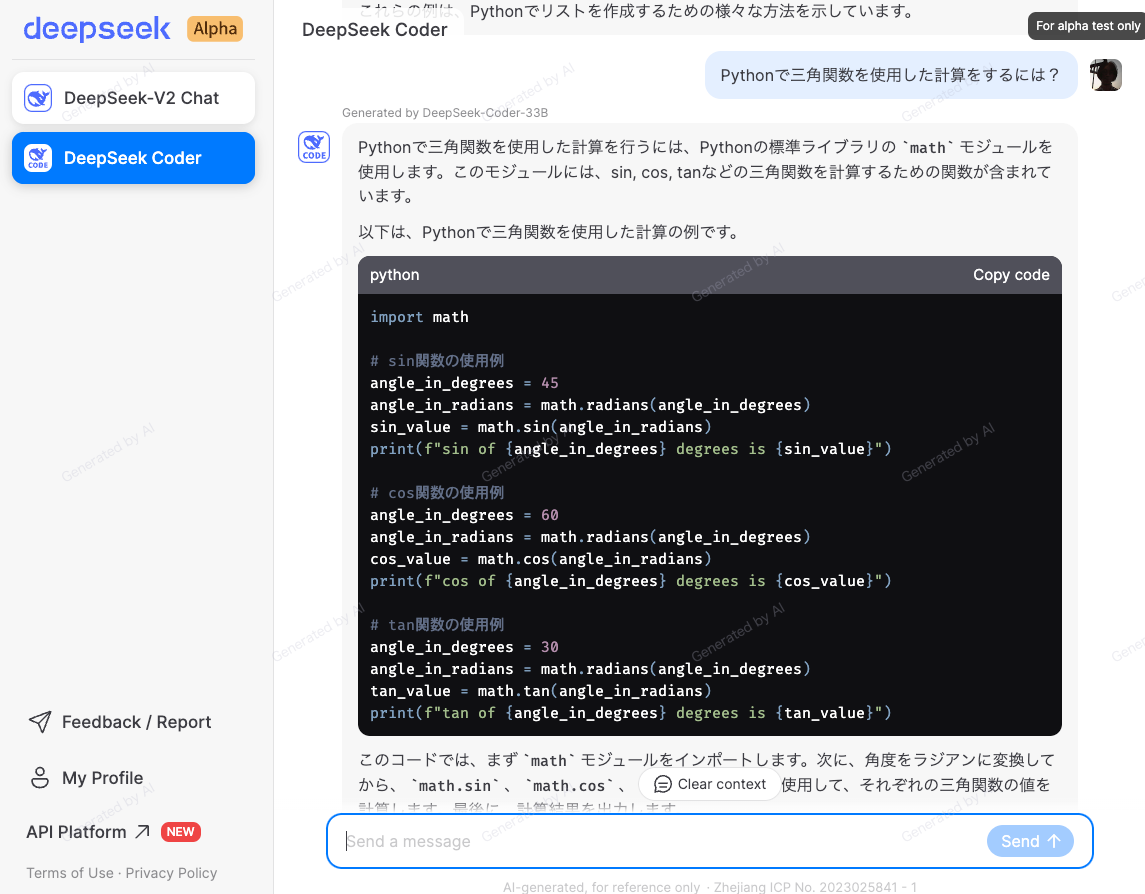

They do much less post-training alignment here than they do for DeepSeek LLM. The optimizer and learning-rate setup follows DeepSeek LLM. DeepSeek LLM is clearly an advanced language model at the forefront of innovation. So I found a model that gave fast responses in the right language. Comprising DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat, these open-source models mark a notable stride forward in language comprehension and versatile application. DeepSeek's official API is compatible with OpenAI's API, so you just need to add a new LLM under admin/plugins/discourse-ai/ai-llms. Despite being worse at coding, they state that DeepSeek-Coder-v1.5 is better, because it outperforms Coder v1 and LLM v1 on NLP and math benchmarks. With everything I read about models, I figured that if I could find a model with a very low number of parameters I could get something worth using, but the catch is that a low parameter count results in worse output. To facilitate seamless communication between nodes in both A100 and H800 clusters, we employ InfiniBand interconnects, known for their high throughput and low latency.
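The official API follows OpenAI's chat-completions format. A request therefore looks like one sent to OpenAI, apart from the base URL, the key, and the model name.

The sketch below only builds the request with Python's standard library and does not send it. Several pieces are assumptions for illustration and should be checked against DeepSeek's API documentation:

- the base URL `https://api.deepseek.com`
- the `/chat/completions` path
- the model name `deepseek-chat`
- the helper name `build_chat_request`

In Discourse, the equivalent values are presumably entered through the admin page at admin/plugins/discourse-ai/ai-llms rather than in code.

```python
import json
import urllib.request

# Assumed values: verify the base URL and model name against DeepSeek's docs.
BASE_URL = "https://api.deepseek.com"
MODEL = "deepseek-chat"


def build_chat_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    `build_chat_request` is a hypothetical helper, not part of any SDK.
    """
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("sk-placeholder", "Hello")
    print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it. That requires a real API key and network access.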

These GPUs are interconnected using a combination of NVLink and NVSwitch technologies, ensuring efficient data transfer within nodes. There is a risk of bias because DeepSeek-V2 is trained on vast amounts of data from the internet. In our various evaluations of quality and latency, DeepSeek-V2 has shown the best mix of both. So I danced through the fundamentals; every learning session was the best part of the day, and every new course section felt like unlocking a new superpower. The key contributions of the paper include a novel approach to leveraging proof assistant feedback and advances in reinforcement learning and search algorithms for theorem proving. The DeepSeek-Coder-V2 paper introduces a significant advance in breaking the barrier of closed-source models in code intelligence. Paper summary: 1.3B to 33B LLMs on 1/2T code tokens (87 languages) with FIM and a 16K sequence length. Like DeepSeek LLM, they use LeetCode contests as a benchmark, where 33B achieves a Pass@1 of 27.8%, again better than GPT-3.5. In 1.3B experiments, they observe that FIM 50% generally does better than MSP 50% on both infilling and code completion benchmarks. They also find evidence of data contamination, as their model (and GPT-4) performs better on problems from July/August. The researchers evaluated their model on the Lean 4 miniF2F and FIMO benchmarks, which contain hundreds of mathematical problems.
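Pass@1 figures like the 27.8% above are usually computed with the unbiased pass@k estimator introduced alongside OpenAI's HumanEval benchmark. The procedure is:

1. Generate n samples per problem.
2. Count the c samples that pass the tests.
3. Estimate the chance that at least one of k drawn samples passes.

I am assuming the LeetCode results follow this convention. The function names below are illustrative. With one greedy sample per problem (n = 1), pass@1 reduces to the fraction of problems solved.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: 1 - C(n - c, k) / C(n, k).

    n: samples generated, c: samples that passed, k: sampling budget.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k draw must include at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    print(pass_at_k(10, 3, 1), pass_at_k(10, 3, 5))
```

For example, 3 passing samples out of 10 give pass@1 of about 0.3. The same counts give pass@5 of about 0.917, since most 5-sample draws contain a passing sample.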

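Fill-in-the-Middle (FIM) training reorders a fraction of documents so that the model sees the prefix and suffix before generating the missing middle. "FIM 50%" presumably means that roughly half the documents are transformed.

The sketch below shows one common Prefix-Suffix-Middle (PSM) layout, plus the Suffix-Prefix-Middle (SPM) variant for comparison. It makes two simplifications:

- The sentinel strings `<fim_prefix>`, `<fim_suffix>`, and `<fim_middle>` are hypothetical placeholders. Real tokenizers define their own special tokens.
- It splits on characters rather than tokens.

```python
import random

# Hypothetical sentinel strings; real tokenizers define their own special tokens.
PRE, SUF, MID = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"


def fim_transform(doc: str, rng: random.Random, fim_rate: float = 0.5,
                  mode: str = "psm") -> str:
    """With probability `fim_rate`, split `doc` into prefix/middle/suffix
    and reorder it so the middle comes last; otherwise return it unchanged."""
    if mode not in ("psm", "spm"):
        raise ValueError(f"unknown FIM mode: {mode!r}")
    if not doc or rng.random() >= fim_rate:
        return doc  # stays ordinary left-to-right training text
    # Two distinct random cut points split the document into three spans.
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    if mode == "psm":
        return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}"
    # SPM: suffix first, then prefix, then the middle to be generated.
    return f"{SUF}{suffix}{PRE}{prefix}{MID}{middle}"


if __name__ == "__main__":
    rng = random.Random(0)
    print(fim_transform("def add(a, b):\n    return a + b\n", rng, fim_rate=1.0))
```

At inference time, the same layout is used with the middle left empty. The model then generates the code that belongs between the cursor's prefix and suffix.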

Capabilities: Mixtral is a sophisticated AI model using a Mixture of Experts (MoE) architecture. This produced the Instruct model. I assume @oga wants to use the official DeepSeek API service instead of deploying an open-source model on their own. Some GPTQ clients have had issues with models that use Act Order plus Group Size, but this is generally resolved now. I don't get "interconnected in pairs." An SXM A100 node should have eight GPUs connected all-to-all over an NVSwitch. The answers you get from the two chatbots are very similar. The callbacks were set, and the events are configured to be sent to my backend. They have only a single small section for SFT, where they use a 100-step warmup cosine schedule over 2B tokens at a 1e-5 learning rate with a 4M batch size. Meta has to use its financial advantages to close the gap; this is a possibility, but not a given.
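The SFT numbers imply a short run. At 4M tokens per step, 2B tokens is 2,000,000,000 / 4,000,000 = 500 optimizer steps, of which the first 100 are warmup.

The sketch below implements a linear-warmup-then-cosine schedule with those values. Three details are my assumptions, not the source's: reading "4M batch size" as tokens per step, decaying to zero, and the function name `lr_at`.

```python
import math

# Peak LR, warmup, and token counts come from the SFT description above;
# treating the batch size as tokens and decaying to 0 are assumptions.
PEAK_LR = 1e-5
WARMUP_STEPS = 100
TOTAL_TOKENS = 2_000_000_000
BATCH_TOKENS = 4_000_000
TOTAL_STEPS = TOTAL_TOKENS // BATCH_TOKENS  # 500 optimizer steps


def lr_at(step: int, peak: float = PEAK_LR, warmup: int = WARMUP_STEPS,
          total: int = TOTAL_STEPS, min_lr: float = 0.0) -> float:
    """Linear warmup to `peak`, then cosine decay to `min_lr` at `total`."""
    if step < warmup:
        return peak * (step + 1) / warmup
    progress = min(1.0, (step - warmup) / max(1, total - warmup))
    return min_lr + 0.5 * (peak - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for s in (0, 99, 100, 300, 500):
        print(s, f"{lr_at(s):.2e}")
```

Halfway through the decay phase (step 300), the rate is half the peak, 5e-6. It reaches 0 at step 500.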

I would love to see a quantized version of the TypeScript model I use, for an additional performance boost. On AIME math problems, performance rises from 21 percent accuracy when it uses fewer than 1,000 tokens to 66.7 percent accuracy when it uses more than 100,000, surpassing o1-preview's performance. Other non-OpenAI code models at the time sucked compared to DeepSeek-Coder in the tested regime (basic problems, library usage, LeetCode, infilling, small cross-context, math reasoning), and their basic instruct fine-tunes sucked especially badly. The DeepSeek-Coder-Base-v1.5 model, despite a slight decrease in coding performance, shows marked improvements across most tasks compared to the DeepSeek-Coder-Base model. They use a compiler, a quality model, and heuristics to filter out garbage. To train one of its newer models, the company was forced to use Nvidia H800 chips, a less powerful version of the H100 chip available in the U.S. The prohibition of APT under the OISM marks a shift in the U.S. They mention possibly using Suffix-Prefix-Middle (SPM) at the start of Section 3, but it is not clear to me whether they actually used it for their models. I started by downloading CodeLlama, DeepSeek Coder, and StarCoder, but I found all the models to be pretty slow, at least for code completion. I should mention that I've gotten used to Supermaven, which specializes in fast code completion.
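A filtering pass of the kind described above can be sketched for Python sources. The built-in `compile()` acts as the "compiler" check: it parses the code without executing it. Two cheap heuristics then reject minified or symbol-heavy files. The function name and both thresholds are hypothetical, and the learned quality model is left out entirely.

```python
def passes_filters(source: str, max_line_len: int = 1000,
                   min_alnum_frac: float = 0.25) -> bool:
    """Return True if a Python source file survives some cheap filters.

    `passes_filters` and its thresholds are illustrative; no learned
    quality model is applied here.
    """
    # Compiler check: parse (never execute) and drop files with bad syntax.
    # Older Pythons raise ValueError for null bytes, newer ones SyntaxError.
    try:
        compile(source, "<candidate>", "exec")
    except (SyntaxError, ValueError):
        return False
    # Heuristic 1: empty files, or very long lines that suggest minified
    # or generated code.
    lines = source.splitlines()
    if not lines or max(len(line) for line in lines) > max_line_len:
        return False
    # Heuristic 2: require a minimum share of alphanumeric characters.
    alnum = sum(ch.isalnum() for ch in source)
    return alnum / len(source) >= min_alnum_frac


if __name__ == "__main__":
    print(passes_filters("def add(a, b):\n    return a + b\n"))
    print(passes_filters("def broken(:\n    pass\n"))
```

Cheap checks like these run first because they are fast. A slower learned quality model would then only see files that already parse and look plausible.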
