Wish To Know More About DeepSeek?

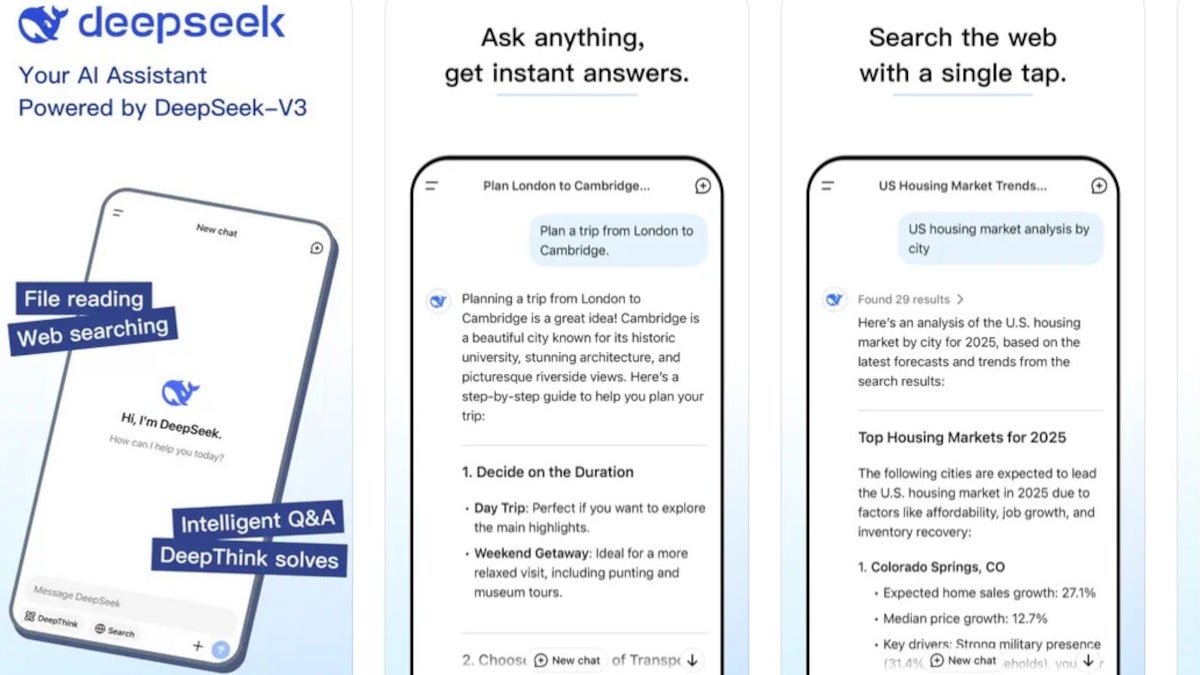

For the past week, I’ve been using DeepSeek V3 as my daily driver for regular chat tasks. The DeepSeek-Coder-Base-v1.5 model, despite a slight decrease in coding performance, shows marked improvements across most tasks compared with the DeepSeek-Coder-Base model. Among the noteworthy improvements in DeepSeek’s training stack are the following. Concerns over data privacy and security have intensified following the unprotected database breach linked to the DeepSeek AI programme, which exposed sensitive user data. Giving everyone access to powerful AI has the potential to raise safety concerns, including national security issues and general user safety. Please don't hesitate to report any issues or contribute ideas and code. Common practice in language modeling laboratories is to use scaling laws to de-risk ideas for pretraining, so that you spend very little time training at the largest sizes on ideas that do not lead to working models. Flexing on how much compute you have access to is common practice among AI companies.
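Scaling laws de-risk pretraining ideas by fitting a trend to cheap, small runs and extrapolating before committing to the largest sizes. The sketch below is a minimal illustration, assuming a pure power law L(C) = a·C^(-b) in training compute C, fit by least squares in log-log space. Real fits often add an irreducible-loss term. The function names and the synthetic data are hypothetical and are not DeepSeek's method.

```python
import math
from typing import List, Tuple


def fit_power_law(compute: List[float], loss: List[float]) -> Tuple[float, float]:
    """Fit loss ~= a * compute**(-b) by linear regression on (log C, log L).

    Assumes all values are positive. Returns (a, b).
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var  # slope of log(loss) vs log(compute); negative if loss falls
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


def predict_loss(a: float, b: float, compute: float) -> float:
    """Extrapolate the fitted power law to a new compute budget."""
    return a * compute ** (-b)


# Hypothetical small-scale runs (synthetic numbers, not real measurements).
small_compute = [1e18, 1e19, 1e20]
small_loss = [predict_loss(50.0, 0.05, c) for c in small_compute]
a, b = fit_power_law(small_compute, small_loss)
print(f"a={a:.2f}, b={b:.3f}, predicted loss at 1e24 FLOPs: {predict_loss(a, b, 1e24):.3f}")
```

The point of working in log space is that a power law becomes a straight line there. A few small runs can then tell you whether an idea is worth scaling before any large run is paid for.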

Translation: In China, national leaders are the common choice of the people. If you have a lot of money and a lot of GPUs, you can go to the best people and say, "Hey, why would you go work at a company that really can't give you the infrastructure you need to do the work you want to do?" For Chinese companies that are feeling the pressure of substantial chip export controls, it shouldn't be seen as particularly surprising that their attitude is "Wow, we can do way more than you with less." I’d probably do the same in their shoes; it is much more motivating than "my cluster is bigger than yours." This goes to show that we need to understand how important the narrative of compute numbers is to their reporting. Lower bounds for compute are important for understanding the progress of technology and peak efficiency, but without substantial compute headroom to experiment on large-scale models, DeepSeek-V3 would never have existed.

This is a scenario OpenAI explicitly wants to avoid; it’s better for them to iterate quickly on new models like o3. It’s hard to filter it out at pretraining, especially if it makes the model better (so you may want to turn a blind eye to it). The fact that a model of this quality is distilled from DeepSeek’s reasoning model series, R1, makes me more optimistic that the reasoning model is the real deal. To get a visceral sense of this, take a look at this post by AI researcher Andrew Critch, which argues (convincingly, imo) that a lot of the danger of AI systems comes from the fact that they may think much faster than us. Many of these details were surprising and extremely unexpected, highlighting numbers that made Meta look wasteful with GPUs, which caused many online AI circles to more or less freak out. To translate: they’re still very strong GPUs, but the restrictions limit the effective configurations you can use them in.

How do you use deepseek-coder-instruct to complete code? Click here to access Code Llama. Here are some examples of how to use our model. You can install it from source, use a package manager like Yum, Homebrew, apt, etc., or use a Docker container. This is particularly beneficial in industries like finance, cybersecurity, and manufacturing. It almost feels like the shallow personality or post-training of the model makes it seem to have more to offer than it delivers.

DeepSeek Coder lets you submit existing code with a placeholder, so that the model can complete it in context. PCs offer a highly efficient engine for model inference, unlocking a paradigm where generative AI can execute not just when invoked, but also power semi-continuously running services. The model is available under the MIT licence. The Mixture-of-Experts (MoE) approach used by the model is key to its efficiency.

The start-up had become a key player in the "Chinese Large-Model Technology Avengers Team" that would counter US AI dominance, said another. Compared to Meta’s Llama 3.1 (405 billion parameters used all at once), DeepSeek V3 is over 10 times more efficient yet performs better. In 2019, High-Flyer became the first quant hedge fund in China to raise over 100 billion yuan (roughly $14 billion).
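Completing code "with a placeholder" is fill-in-the-middle (FIM) prompting. The code before the gap becomes a prefix, the code after it becomes a suffix, and the model generates the middle. The sketch below builds such a prompt string. It assumes the special-token spellings shown in the DeepSeek Coder README; verify them against the tokenizer you actually load. The `<FILL_ME>` marker and the `build_fim_prompt` helper are hypothetical and exist only for this illustration.

```python
# FIM special tokens as spelled in the DeepSeek Coder README (note the
# full-width bars and the U+2581 "▁"); check them against your tokenizer.
FIM_BEGIN = "<｜fim▁begin｜>"
FIM_HOLE = "<｜fim▁hole｜>"
FIM_END = "<｜fim▁end｜>"

# Hypothetical marker used only in this sketch to mark the gap in user code.
PLACEHOLDER = "<FILL_ME>"


def build_fim_prompt(code_with_placeholder: str, placeholder: str = PLACEHOLDER) -> str:
    """Turn code containing exactly one placeholder into a FIM prompt.

    Text before the placeholder is the prefix, text after it is the suffix;
    the model is expected to generate the missing middle.
    """
    if code_with_placeholder.count(placeholder) != 1:
        raise ValueError("expected exactly one placeholder")
    prefix, suffix = code_with_placeholder.split(placeholder)
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"


snippet = """def quick_sort(arr):
    if len(arr) <= 1:
        return arr
    pivot = arr[0]
<FILL_ME>
    return quick_sort(left) + [pivot] + quick_sort(right)"""

print(build_fim_prompt(snippet))
```

The resulting string is what you would tokenize and pass to a base (not instruct) DeepSeek Coder checkpoint. Keep only the newly generated tokens as the completion for the gap.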
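The efficiency of a Mixture-of-Experts model comes from routing. Each token is sent to only a few experts instead of through every parameter. The sketch below shows generic top-k softmax routing in plain Python. The dot-product router, the toy "experts", and k=2 are illustrative assumptions, and DeepSeek's actual router differs in several details.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    # Router score for each expert: dot product of its gate vector with the token.
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in gate_weights]
    # Indices of the k highest-scoring experts.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Mixing weights: softmax over the selected experts only.
    probs = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only the k selected experts are evaluated
        out = [o + p * y_j for o, y_j in zip(out, y)]
    return out


# Toy usage: four "experts" that just scale their input; record which ones run.
calls: List[int] = []


def make_expert(scale: float, idx: int) -> Callable[[Vector], Vector]:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * t for t in v]
    return expert


experts = [make_expert(s, i) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
y = moe_forward([2.0, 1.0], gates, experts, k=2)
print("experts run:", calls, "output:", y)
```

Only two of the four experts execute for this token, so per-token compute scales with k rather than with the total number of experts. For scale, DeepSeek-V3 is reported to activate about 37B of its 671B total parameters per token, whereas dense Llama 3.1 405B uses all 405B. That is roughly an 11x difference in per-token parameters, which is one way to read the "over 10 times" comparison above.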
