Getting the Best Out of DeepSeek

...the AI race and whether the demand for AI chips will hold up. Current large language models (LLMs) have more than 1 trillion parameters, requiring many computing operations across tens of thousands of high-performance chips inside a data center. Second, systems like this are going to be the seeds of future frontier AI systems doing this work, because the systems built here to do things like aggregate data gathered by drones and build live maps will serve as input data for future systems.

We tried. We had some ideas that we wanted people to leave those companies and start, and it's really hard to get them out of it. You do see companies, with people leaving to start those kinds of companies, but outside of that it's hard to convince founders to leave. There's no one leaving OpenAI and saying, "I'm going to start a company and dethrone them." It's kind of crazy. Like any laboratory, DeepSeek surely has other experimental work going on in the background too. They are people who were previously at large companies and felt that the company could not move itself in a way that would keep it on track with the new technology wave.

They end up starting new companies.

Based on our experimental observations, we have found that improving benchmark performance on multiple-choice (MC) questions, such as MMLU, CMMLU, and C-Eval, is a relatively easy task. I also use it for general-purpose tasks, such as text extraction and basic knowledge questions. The main reason I use it so heavily is that the usage limits for GPT-4o still seem considerably higher than those for Sonnet 3.5. DeepSeek reports that the model's accuracy improves dramatically when it uses more tokens at inference to reason about a prompt (though the web user interface doesn't let users control this). Far from exhibiting itself to human academic endeavour as a scientific object, AI is a meta-scientific control system and an invader, with all the insidiousness of planetary technocapital flipping over. They can "chain" together multiple smaller models, each trained below the compute threshold, to create a system with capabilities comparable to a large frontier model, or simply "fine-tune" an existing, freely available advanced open-source model from GitHub. It almost feels as if the shallowness of the model's character or post-training makes it seem to have more to offer than it actually delivers.

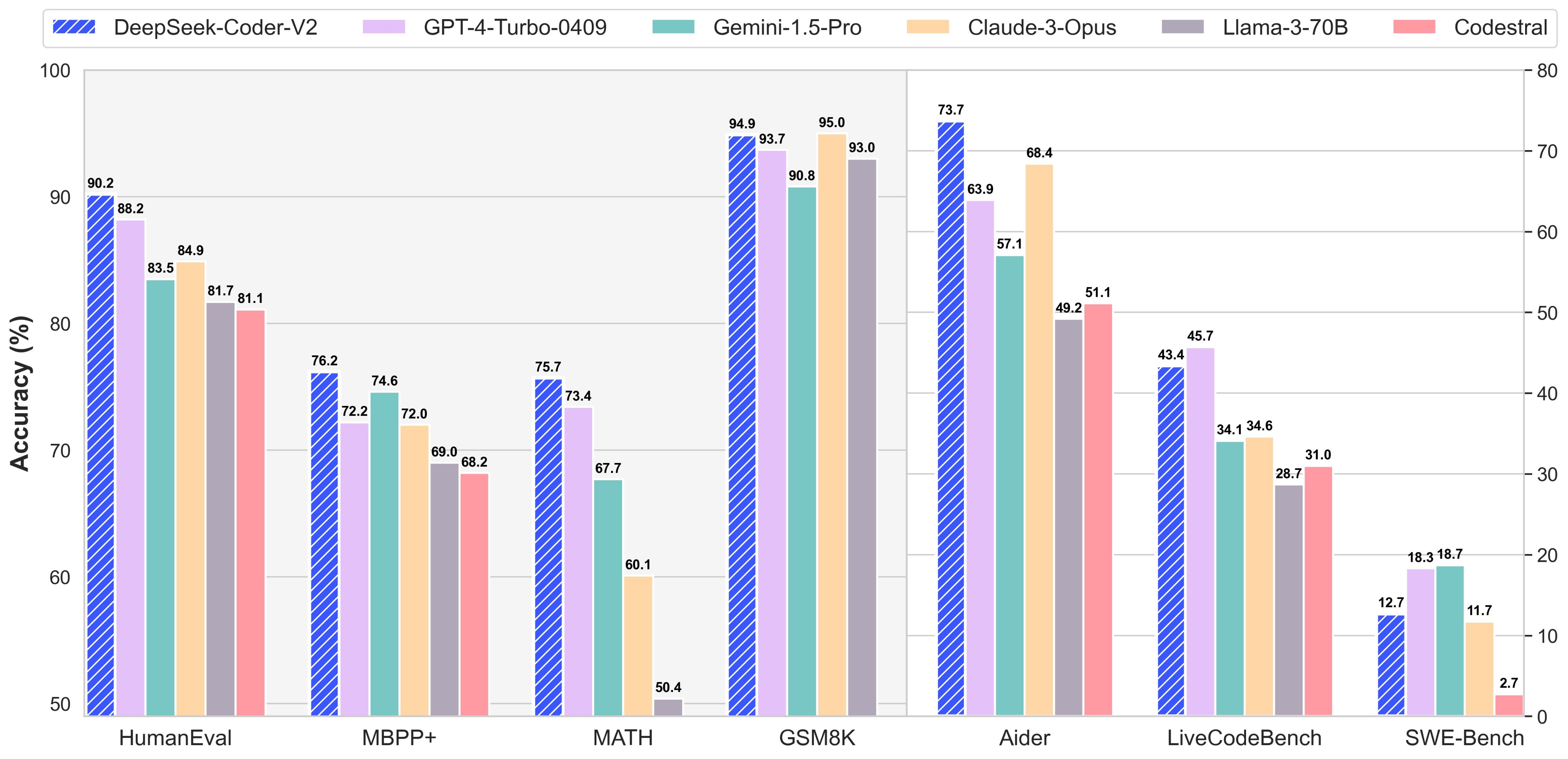

DeepSeek is the name of a free AI-powered chatbot that looks, feels and works very much like ChatGPT. You go on ChatGPT and it's one-on-one. It's hard to filter it out at pretraining, especially if it makes the model better (so you may want to turn a blind eye to it). Some people might not want to do it. If you want to use DeepSeek more professionally and connect to it through the APIs for tasks like coding in the background, then there is a charge. DeepSeek-R1 achieves performance comparable to OpenAI-o1 across math, code, and reasoning tasks. "We attribute the state-of-the-art performance of our models to: (i) large-scale pretraining on a large curated dataset, which is specifically tailored to understanding humans, (ii) scaled high-resolution and high-capacity vision transformer backbones, and (iii) high-quality annotations on augmented studio and synthetic data," Facebook writes. DeepSeek's competitive performance at relatively minimal cost has been recognized as potentially challenging the global dominance of American A.I. Tracking only the compute used for a project's final pretraining run is a very unhelpful way to estimate its actual cost.
