Deepseek Sources: google.com (webpage)

Furthermore, DeepSeek presents at least two kinds of potential "backdoor" risks. Because all user data is stored in China, the biggest concern is the potential for a data leak to the Chinese government. And although the DeepSeek model is censored in the version hosted in China, in accordance with local laws, Zhao pointed out that the models that are downloadable for self-hosting or hosted by Western cloud providers (AWS/Azure, etc.) are generally not censored. They are reinvigorating the open-source AI movement globally by making a true frontier-level model available under a fully open MIT license. The underlying model architecture and model weights of DeepSeek's R1 reasoning model are fully open-source and distributed under a permissive MIT license. "It's mindboggling that we are unknowingly allowing China to survey Americans and we're doing nothing about it," said Ivan Tsarynny, CEO of Feroot. "It's hard to believe that something like this was unintended." DeepSeek's downloadable model shows fewer signs of built-in censorship in contrast to its hosted models, which appear to filter politically sensitive topics like Tiananmen Square.

DeepSeek represents the latest challenge to OpenAI, which established itself as an industry leader with the debut of ChatGPT in 2022. OpenAI has helped push the generative AI industry forward with its GPT family of models, as well as its o1 class of reasoning models. 2. Set up your development environment with the necessary libraries, such as Python's requests or openai package. Note that you do not need to, and should not, set manual GPTQ parameters any more. Even discussing a carefully scoped set of risks can raise difficult, unsolved technical questions. If you're looking for a more budget-friendly option with strong technical capabilities, DeepSeek might be a great fit. That means taking the entire tech stack into account and looking at the context of what you're trying to build. Comparing DeepSeek and ChatGPT involves looking at their goals, technologies, and applications. DeepSeek offers open-source AI models, which means anyone can use, modify, and integrate them into their applications. Open-Source AI: DeepSeek makes its AI models, code, and training details open to the public so that anyone can use, modify, or learn from them.
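As a rough illustration of the environment-setup step mentioned above, the following sketch builds (but does not send) a chat request. It uses only the Python standard library instead of the requests or openai packages so that it runs without extra installs. The endpoint URL, the model name, and the helper names `build_chat_request` and `send` are assumptions made for this example, not details taken from the text; check the provider's documentation before relying on them.

```python
import json
import urllib.request

# Assumed values for illustration only; verify against the provider's docs.
API_URL = "https://api.deepseek.com/chat/completions"  # assumption
MODEL_NAME = "deepseek-chat"  # assumption


def build_chat_request(api_key, prompt):
    """Build an HTTP POST request for a single-turn chat prompt (hypothetical helper)."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and decode the JSON response (needs network access and a valid key)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `build_chat_request("YOUR_KEY", "Hello")` only constructs the request object; nothing leaves the machine until it is passed to `send`.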

Its public release provides the first look into the details of how these reasoning models work. This stage used three reward models. Ensuring that DeepSeek AI's models are used responsibly is a key challenge. What DeepSeek's emergence really changes is the landscape of model access: its models are freely downloadable by anyone. DeepSeek does highlight a new strategic challenge: what happens if China becomes the leader in offering publicly available AI models that are freely downloadable? That means DeepSeek's efficiency gains are not a great leap, but align with industry trends. Second, new models like DeepSeek's R1 and OpenAI's o1 reveal another crucial role for compute: these "reasoning" models get predictably better the more time they spend thinking. Emergent behavior network. DeepSeek's emergent behavior innovation is the discovery that advanced reasoning patterns can develop naturally through reinforcement learning without being explicitly programmed. "Due to the excessively high costs of pretraining frontier models over the past few years, academic institutions have been for the most part excluded from the innovation process in advanced AI, but with the gift of DeepSeek making such a sophisticated reasoning model available to the world with full source, weights, methodology and a free MIT license, we now enable hundreds of thousands of researchers in small university labs or even at home to partake in bringing progress to the field."

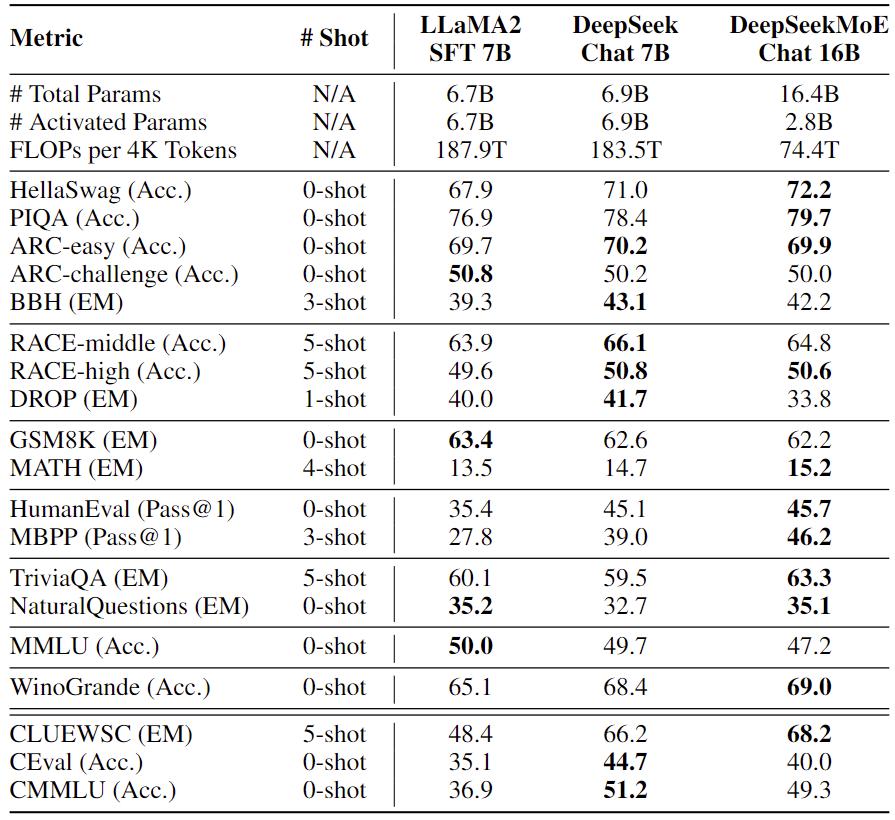

This is particularly useful for users seeking the latest news, trends, or updates in their field. Priced at just 2 RMB per million output tokens, this model offered an affordable solution for users requiring large-scale AI outputs. Users should verify important details from reliable sources. Note that the GPTQ calibration dataset is not the same as the dataset used to train the model; please refer to the original model repo for details of the training dataset(s). LLM: Support DeepSeek-V3 model with FP8 and BF16 modes for tensor parallelism and pipeline parallelism. Aside from standard techniques, vLLM offers pipeline parallelism, allowing you to run this model on multiple machines connected by networks. DeepSeek V3 surpasses other open-source models across multiple benchmarks, delivering performance on par with top-tier closed-source models. As in earlier versions of the eval, models write code that compiles for Java more often (60.58% of code responses compile) than for Go (52.83%). Additionally, it seems that simply asking for Java results in more valid code responses (34 models had 100% valid code responses for Java, only 21 for Go).
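To make the quoted price concrete, here is a small arithmetic sketch. It assumes the 2 RMB figure applies linearly to every output token, with no input-token charge, tiering, or rounding; the function name `output_cost_rmb` is hypothetical.

```python
# Price quoted in the text: 2 RMB per one million output tokens.
PRICE_RMB_PER_MILLION_OUTPUT_TOKENS = 2.0


def output_cost_rmb(output_tokens, price_per_million=PRICE_RMB_PER_MILLION_OUTPUT_TOKENS):
    """Return the output-token cost in RMB, assuming simple linear pricing."""
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    return output_tokens / 1_000_000 * price_per_million
```

Under these assumptions, generating 250,000 output tokens would cost 0.5 RMB, and one million tokens would cost 2 RMB.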
